
Closed


Description

Snapshot housekeeping needs:

[ ] refactoring, AND

[ ] fixing the garbage left in snapshot_store_ref, AND

[ ] fixing the XenServer garbage left on Primary Storage.

For details, please see my comment: #3969 (comment)

Problem 1

- When snapshots are deleted and the GC runs, the PRIMARY references (role_type=primary) are left in the snapshot_store_ref table forever. When we successfully create a snapshot, we remove it from Primary Storage (we copy/export the full snapshot to Secondary Storage and it is removed from Primary immediately), so we also need to remove the PRIMARY reference at the same time (as is done for e.g. XenServer, but XS has other issues, read below).
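A minimal sketch of the bookkeeping described above, assuming KVM/VMware semantics and snapshot.backup.to.secondary=TRUE. SnapshotStoreRefs and backup_snapshot are hypothetical stand-ins (not CloudStack code) for the snapshot_store_ref rows of a snapshot; the only point is that the Primary copy and its PRIMARY reference are removed in the same step instead of leaving the reference for the GC.

```python
from dataclasses import dataclass, field


@dataclass
class SnapshotStoreRefs:
    """Hypothetical, simplified stand-in for snapshot_store_ref:
    maps snapshot_id -> set of role types ("primary", "image")."""
    refs: dict[int, set[str]] = field(default_factory=dict)

    def add(self, snapshot_id: int, role: str) -> None:
        self.refs.setdefault(snapshot_id, set()).add(role)

    def remove(self, snapshot_id: int, role: str) -> None:
        roles = self.refs.get(snapshot_id)
        if roles is not None:
            roles.discard(role)
            if not roles:
                # No references left for this snapshot: drop the entry entirely.
                del self.refs[snapshot_id]


def backup_snapshot(refs: SnapshotStoreRefs, primary_files: set[int], snapshot_id: int) -> None:
    """Hypothetical flow: take the snapshot on Primary, export it in full to
    Secondary, then remove the Primary copy and its PRIMARY reference together."""
    primary_files.add(snapshot_id)
    refs.add(snapshot_id, "primary")
    # Assumed: the full export to Secondary Storage succeeded -> IMAGE reference.
    refs.add(snapshot_id, "image")
    # The Primary copy is removed right away ...
    primary_files.discard(snapshot_id)
    # ... so its PRIMARY reference goes in the same step, not left behind forever.
    refs.remove(snapshot_id, "primary")


if __name__ == "__main__":
    refs = SnapshotStoreRefs()
    files: set[int] = set()
    backup_snapshot(refs, files, 1)
    print(refs.refs, files)  # {1: {'image'}} set()
```

With this ordering, deleting the snapshot later only has the IMAGE reference left to clean up, so nothing with role_type=primary can outlive it.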

Reproduce the issue:

- set storage.cleanup.interval=60 (seconds)

- keep the default snapshot.backup.to.secondary=TRUE

- spin up a VM (1 or more volumes), VMware or KVM

- create snapshots

- delete snapshots

- wait for the GC to kick in: tail -f /var/log/cloudstack/management/management-server.log | grep "StorageManager-Scavenger"

- check the snapshot_store_ref table: rows with role_type=primary are left behind
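To make the last check repeatable, the query below looks for PRIMARY references whose snapshot has already been destroyed. It is a sketch against a hypothetical, simplified schema in an in-memory SQLite database; the column names and the 'Destroyed' status value follow this issue's wording and are assumptions, so adapt them to the real CloudStack tables before running anything against a management-server DB.

```python
import sqlite3

# Hypothetical, simplified schema: only the columns needed to show the check.
# Names follow the wording in this issue, not the real CloudStack DDL.
SCHEMA = """
CREATE TABLE snapshots (id INTEGER PRIMARY KEY, status TEXT);
CREATE TABLE snapshot_store_ref (
    snapshot_id INTEGER REFERENCES snapshots(id),
    role_type   TEXT  -- 'primary' or 'image'
);
"""

# PRIMARY references that still exist although their snapshot is destroyed.
LEFTOVER_PRIMARY_REFS = """
SELECT r.snapshot_id
FROM snapshot_store_ref AS r
JOIN snapshots AS s ON s.id = r.snapshot_id
WHERE r.role_type = 'primary' AND s.status = 'Destroyed'
ORDER BY r.snapshot_id
"""


def leftover_primary_refs(conn: sqlite3.Connection) -> list[int]:
    return [row[0] for row in conn.execute(LEFTOVER_PRIMARY_REFS)]


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.executescript(SCHEMA)
    # Two deleted snapshots whose PRIMARY rows were never cleaned up.
    conn.executemany("INSERT INTO snapshots VALUES (?, ?)", [(1, "Destroyed"), (2, "Destroyed")])
    conn.executemany(
        "INSERT INTO snapshot_store_ref VALUES (?, ?)",
        [(1, "primary"), (2, "primary")],
    )
    print(leftover_primary_refs(conn))  # [1, 2]
```

An empty result after the GC has run would indicate the PRIMARY references are being cleaned up as expected.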

Problem 2

- XenServer snapshots are chained (each new snap is a child of the previous snapshot); this is NOT how it works for KVM/VMware, so a slightly different flow is needed for XS

- It seems we always export "full" snapshots to Secondary Storage (see the steps to reproduce below), so the parent/child relation in the DB makes no sense (should we remove it?)

- Due to the parent/child relation, previous snaps cannot be removed. This is caused by how the code is implemented, not by XS itself: on the XS side you can simply delete any snap and XS will do its coalescing in the background (i.e. not immediately)

- The current code (as of PR 3969) will actually remove all parents post-mortem, after all the child snaps are removed, i.e. it removes all IMAGE reference records from the snapshot_store_ref table when the last (child) snap is deleted. The last snap is deleted on Primary Storage (older/parent snaps are NOT, since their PRIMARY references are missing at that moment, read below...) and all snaps in the chain (all parents of that last child snap) are also removed successfully from Secondary Storage 👍. So garbage is left on Primary Storage (even though the ACS DB says otherwise) 👎

- How the code currently works (observed by testing!): when a new snap is created for an XS volume, the previous snap is "deleted". The snap itself is not deleted on Primary (and it seems it should be), but the PRIMARY reference for the previous snap is removed from the snapshot_store_ref table, so the GC later doesn't find it and the snap never gets deleted from Primary Storage.

- How it should work (as it seems?): when the previous snap is deleted (after a new one has been created and successfully backed up to Secondary Storage), ACS should really delete it on Primary Storage (ignoring the meaningless parent/child relation in the code, since the snap CAN be deleted in e.g. XenCenter) and continue (as before) to remove the PRIMARY reference from the snapshot_store_ref table. This is how it works with KVM/VMware (once we move the snapshot to Sec Stor, we delete it on Primary Storage)

-- Idea: why not delete even the last snap from Primary Storage? Any "create template" or "create volume" operation works by reading the snap from Secondary Storage, never from Primary, so we do NOT need it (same as with KVM and VMware). This all assumes we are backing up the snap to Secondary Storage
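The proposed XS flow, including the optional "delete even the last snap" idea, can be sketched as follows. XsVolumeState, create_snapshot_proposed and the delete_latest flag are hypothetical names; the model assumes the full export to Secondary Storage has already succeeded and ignores the parent/child relation, as suggested above. The invariant it keeps is that every snap on Primary Storage has a PRIMARY reference and vice versa.

```python
from dataclasses import dataclass, field


@dataclass
class XsVolumeState:
    """Hypothetical model of one XenServer volume's snapshots.
    primary: snaps that physically exist on Primary Storage (what XenCenter shows).
    primary_refs: snaps that still have a PRIMARY row in snapshot_store_ref."""
    primary: list[str] = field(default_factory=list)
    primary_refs: set[str] = field(default_factory=set)


def create_snapshot_proposed(state: XsVolumeState, name: str, delete_latest: bool = False) -> None:
    """Sketch of the proposed flow, assuming snapshot.backup.to.secondary=true and
    that the full export of `name` to Secondary Storage has already succeeded."""
    previous = state.primary[-1] if state.primary else None
    state.primary.append(name)
    state.primary_refs.add(name)
    if previous is not None:
        # Delete the previous snap on Primary Storage for real (XS coalesces the
        # VHD chain in the background) and drop its PRIMARY ref in the same step.
        state.primary.remove(previous)
        state.primary_refs.discard(previous)
    if delete_latest:
        # The "idea" variant: Secondary Storage is the only read source, so even
        # the newest snap can be removed from Primary.
        state.primary.remove(name)
        state.primary_refs.discard(name)


if __name__ == "__main__":
    state = XsVolumeState()
    for snap in ("snap1", "snap2", "snap3"):
        create_snapshot_proposed(state, snap)
    print(state.primary, state.primary_refs)  # ['snap3'] {'snap3'}
```

Running it for snap1, snap2 and snap3 leaves only snap3 on Primary (or nothing with delete_latest=True), with no orphaned files and no orphaned PRIMARY rows.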

Reproduce the issue:

- Spin up a XenServer VM (the behaviour is the same for ROOT and DATA volumes, but it is easier to observe things on DATA), so have 1 DATA volume attached to the VM

- write 10MB of data to the volume (dd... bs=10M count=1) and create "snap1" (use that name); write a total of 20MB (dd... bs=20M count=1) and create "snap2"; write a total of 30MB (dd... bs=30M count=1) and create "snap3"

- We now have snap1 (parent of) snap2 (parent of) snap3 (last child). Before and after each snap creation, observe the snapshot_store_ref records and how the PRIMARY reference of the previous snap is deleted when a new one is created; at the end we have a PRIMARY ref only for "snap3"

- Delete snap1: even though the API call/deletion succeeds, the mgmt logs report that this snap has children, and you can observe that nothing is deleted on Primary Storage (use XenCenter to confirm the existence of "snap1", the name we gave the snap during its creation) and nothing is removed on Secondary Storage either. Only the main snap record in the "snapshots" table is marked as DESTROYED, and its IMAGE references in snapshot_store_ref are also marked as DESTROYED

- Delete snap2: the same thing happens as with snap1; it has a child (snap3), so nothing is really deleted

- Delete snap3: observe how it is deleted properly; all 3 images are immediately deleted on Secondary Storage, BUT only snap3 is deleted on Primary Storage

- This leaves "snap1" and "snap2" on Primary Storage (confirm via XenCenter). As a workaround, we can now manually delete those 2 snaps via XenCenter (in any order), and they will eventually be removed from the VHD chain (this takes some time)
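The observed behaviour from these steps can be replayed with a small model. ObservedXsFlow is hypothetical and only encodes what the steps above report (the PRIMARY ref of the previous snap is dropped on create, deletion is deferred while a live child exists, and Primary cleanup is driven by PRIMARY refs only); it is not how CloudStack is implemented internally.

```python
from dataclasses import dataclass, field


@dataclass
class ObservedXsFlow:
    """Hypothetical model of the behaviour observed in the steps above (not CloudStack code)."""
    chain: list[str] = field(default_factory=list)         # parent -> child order
    destroyed: set[str] = field(default_factory=set)       # snapshots marked DESTROYED
    primary_files: set[str] = field(default_factory=set)   # what XenCenter shows
    primary_refs: set[str] = field(default_factory=set)    # PRIMARY rows in snapshot_store_ref
    secondary_files: set[str] = field(default_factory=set)

    def create(self, name: str) -> None:
        if self.chain:
            # Only the PRIMARY reference of the previous snap is dropped; the snap stays on Primary.
            self.primary_refs.discard(self.chain[-1])
        self.chain.append(name)
        self.primary_files.add(name)
        self.primary_refs.add(name)
        self.secondary_files.add(name)  # full export to Secondary Storage

    def delete(self, name: str) -> None:
        self.destroyed.add(name)
        children = self.chain[self.chain.index(name) + 1:]
        if any(child not in self.destroyed for child in children):
            return  # "snap has children": only the DB records are marked DESTROYED
        # No live children left: every destroyed snap in the chain is purged from Secondary ...
        for snap in self.chain:
            if snap in self.destroyed:
                self.secondary_files.discard(snap)
        # ... but only snaps that still have a PRIMARY ref are removed from Primary.
        for snap in self.primary_refs & self.destroyed:
            self.primary_files.discard(snap)
        self.primary_refs -= self.destroyed


if __name__ == "__main__":
    flow = ObservedXsFlow()
    for snap in ("snap1", "snap2", "snap3"):
        flow.create(snap)
    for snap in ("snap1", "snap2", "snap3"):
        flow.delete(snap)
    print(sorted(flow.primary_files))  # ['snap1', 'snap2']
```

The leftover ['snap1', 'snap2'] matches what XenCenter shows after the last step, which is the garbage described in Problem 2.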

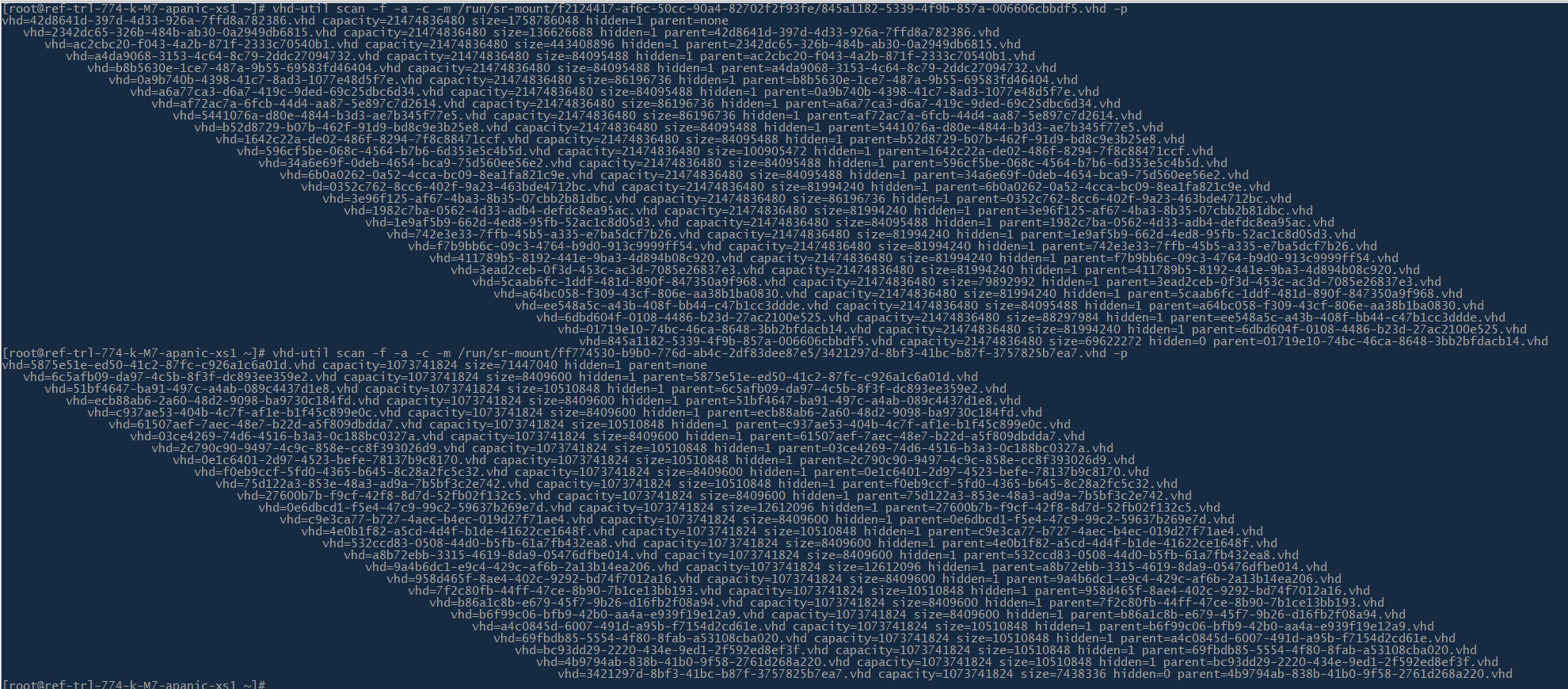

-- after the 2 snaps are manually removed via XenCenter, the internal coalescing of the snaps can be monitored with: watch -n 10 vhd-util scan -f -a -c -m /run/sr-mount/ff774530-b9b0-776d-ab4c-2df83dee87e5/3421297d-8bf3-41bc-b87f-3757825b7ea7.vhd -p (sr-mount//.vhd); it takes a few minutes (or longer for a large volume?)

Some graphics (2 XS volumes and their snaps):

26 hourly snaps (even though max.delta.snaps=16) result in a 26-snap-long chain on the XS side:

GabrielBrascher