VC4VG: Optimizing Video Captions for Text-to-Video Generation

Yang Du*, Zhuoran Lin*, Kaiqiang Song*, Biao Wang, Zhicheng Zheng, Tiezheng Ge, Bo Zheng, Qin Jin

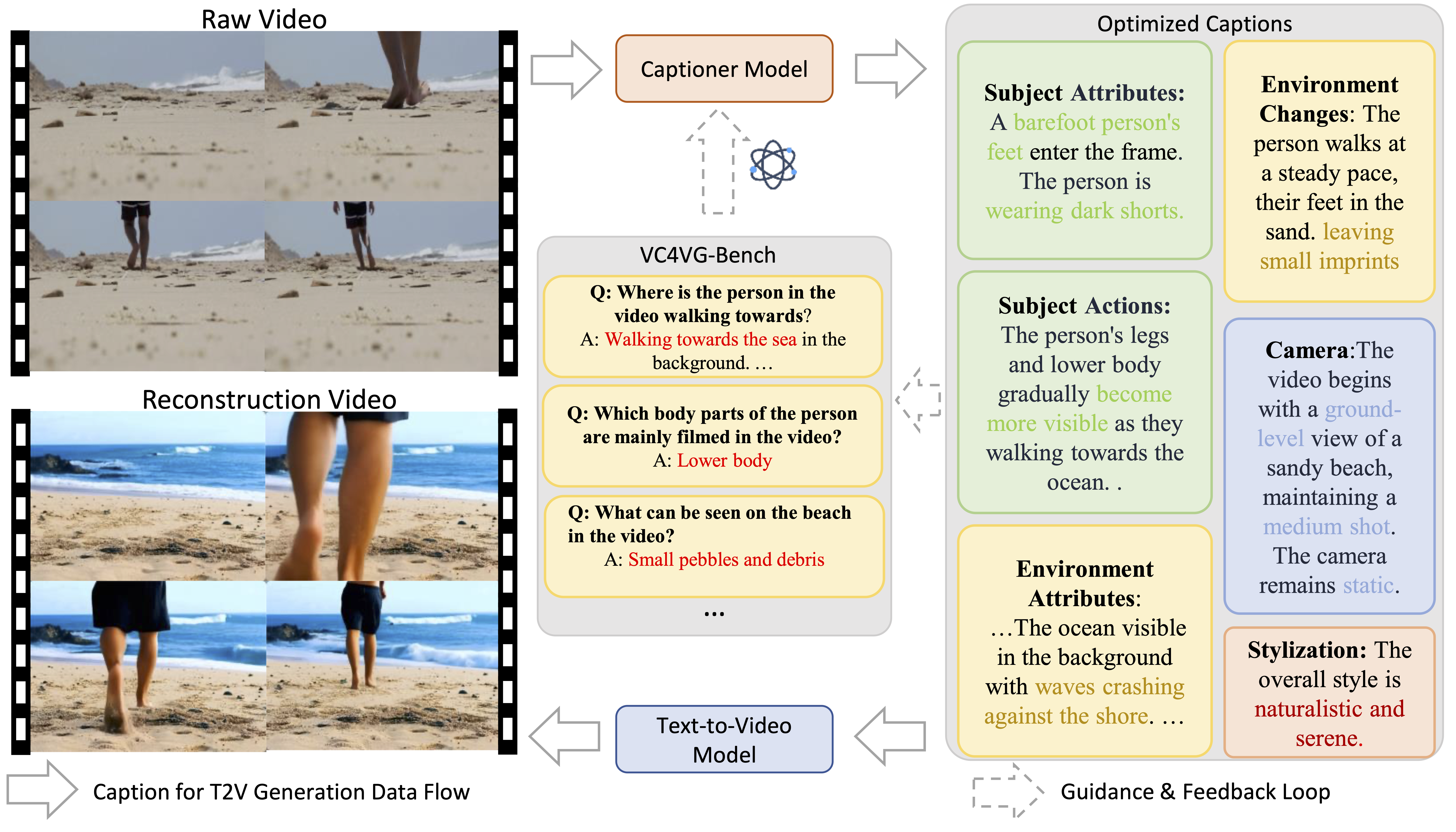

Text-to-video (T2V) generation models rely on high-quality video-text training sets for enhancing instruction-following capabilities and improving the overall quality of generated videos. Existing video captioning work lacks a systematic optimization framework designed specifically from the perspective of T2V generation needs.

- Decompose video captioning into five key dimensions crucial for video reconstruction. We break down video captioning into Subject Attributes, Subject Actions, Environment Attributes, Environment Changes, Camera, and Stylization.

- Propose VC4VG-Bench, a new automatic benchmark with 1,000 QA pairs. This benchmark is designed to evaluate captions based on their suitability for T2V generation.

- Validate our optimization framework through a proof-of-concept and T2V fine-tuning experiments. We show that fine-tuning a T2V model with captions generated by our framework leads to higher-quality video generation.

Our experiments demonstrate that:

- VC4VG can guide model optimization to generate higher quality video captions. Our proof-of-concept model developed through VC4VG, shows the effectiveness of our training strategy.

- Training a T2V model with VC4VG's higher-quality captions directly leads to higher-quality video generation. This is validated by both automated metrics and human evaluation.

- VC4VG-Bench effectively evaluates captions with generation-oriented metrics. It achieves over 80% consistency with human judgment through a dual-reference human annotation strategy.

If you find our work useful, please consider citing our paper:

@misc{du2025vc4vg,

title={VC4VG: Optimizing Video Captions for Text-to-Video Generation},

author={Yang Du and Zhuoran Lin and Kaiqiang Song and Biao Wang and Zhicheng Zheng and Tiezheng Ge and Bo Zheng and Qin Jin},

year={2025},

eprint={2510.24134},

archivePrefix={arXiv},

primaryClass={cs.CV},

url={https://arxiv.org/abs/2510.24134}

}