SAES is a Python library designed to analyse and compare the performance of stochastic algorithms (e.g. metaheuristics and some machine learning techniques) on multiple problems.

The current version of the tool offers the following capabilities:

- Seamless CSV data processing
  - Import and preprocess experiment results effortlessly.
  - Handle datasets of varying sizes with ease.

- Statistical analysis
  - Non-parametric tests:
    - Friedman test
    - Friedman aligned-rank test
    - Quade test
    - Wilcoxon signed-rank test
  - Parametric tests:
    - T-Test
    - Anova
  - Post hoc analysis:
    - Nemenyi test (critical distance)

- Report generation
  - Automated LaTeX reports with the following types of tables:
    - Median table
    - Median table with Friedman test
    - Median table with Wilcoxon pairwise test (pivot-based)
    - Pairwise Wilcoxon test table (1-to-1 comparison)
    - Friedman p-values table (for multiple Friedman test variations)
    - Mean table with Anova test
    - Mean table with T-Test pairwise test (pivot-based)
    - Pairwise T-Test table (1-to-1 comparison)

-

Visualization

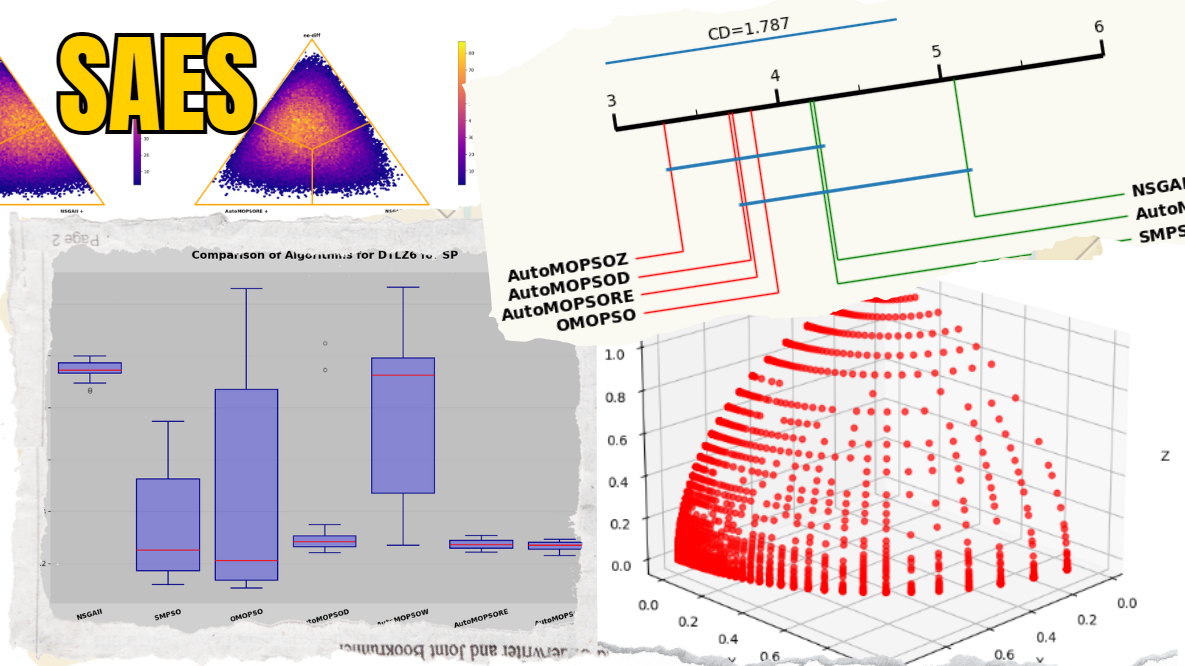

- Boxplot graphs for algorithm comparison.

- Critical distance plots for statistical significance.

- Multiobjetive Pareto Front plots in the Multiobjective module.

- HTML generation for intuitive analysis.

- Bayesian Posterior Plot for probabilistic comparison of algorithm performance.

- Violin Plot for algorithm performance distribution.

- Histogram Plot for visualizing the distribution of algorithm performance.

- Command-line interface (CLI)
  - Command-line access to the different SAES functions.
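For readers new to these tests, the sketch below shows the textbook computation behind the Friedman test and the Nemenyi critical distance (Demšar's formulation): algorithms are ranked on every instance (1 = best, ties get the average rank), the average ranks give the Friedman chi-square statistic, and two algorithms whose average ranks differ by more than the critical distance are considered significantly different. It is a standalone illustration, not SAES's code: the function names are hypothetical, the `q_alpha` values are the standard table for α = 0.05 and up to 10 algorithms, and p-values (available, for example, through `scipy.stats.friedmanchisquare`) are omitted.

```python
import math

# Nemenyi critical values q_alpha for alpha = 0.05, indexed by the number of algorithms k
Q_ALPHA_005 = {2: 1.960, 3: 2.343, 4: 2.569, 5: 2.728, 6: 2.850,
               7: 2.949, 8: 3.031, 9: 3.102, 10: 3.164}


def rank_row(values, maximize=False):
    """Rank the algorithms on one instance (1 = best); ties receive the average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i], reverse=maximize)
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        # Extend j over the run of tied values starting at position i
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # 0-based positions i..j share ranks i+1..j+1
        for pos in range(i, j + 1):
            ranks[order[pos]] = avg
        i = j + 1
    return ranks


def friedman(table, maximize=False):
    """table[n][j] is the score (e.g. the median) of algorithm j on instance n.

    Returns (average rank of each algorithm, Friedman chi-square statistic).
    """
    n, k = len(table), len(table[0])
    rank_rows = [rank_row(row, maximize) for row in table]
    avg = [sum(r[j] for r in rank_rows) / n for j in range(k)]
    chi2 = 12 * n / (k * (k + 1)) * (sum(r * r for r in avg) - k * (k + 1) ** 2 / 4)
    return avg, chi2


def nemenyi_cd(k, n, q_table=Q_ALPHA_005):
    """Critical distance between average ranks for k algorithms on n instances."""
    return q_table[k] * math.sqrt(k * (k + 1) / (6 * n))


# Illustrative scores for 3 algorithms on 4 instances (lower is better here)
scores = [[0.10, 0.20, 0.30], [0.12, 0.25, 0.31], [0.09, 0.18, 0.35], [0.11, 0.22, 0.29]]
avg_ranks, chi2 = friedman(scores)
print(avg_ranks, chi2)  # → [1.0, 2.0, 3.0] 8.0
print(nemenyi_cd(k=3, n=4))
```

With a consistent ranking like this one, the statistic reaches its maximum N(k - 1) = 8; a critical distance plot draws the average ranks on an axis and connects algorithms whose ranks are closer than the CD.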

This tool is aimed at researchers and developers interested in algorithm benchmarking studies for artificial intelligence, optimization, machine learning, and more.

SAES is a new project that is in its early stages of development. Feel free to open issues for comments, suggestions and bug reports.

A stochastic algorithm is an algorithm that incorporates randomness as part of its logic. This randomness leads to variability in outcomes even when the algorithm is applied to the same problem with the same initial conditions. Stochastic algorithms are widely used in fields such as optimization, machine learning, and simulation, because randomness helps them explore larger solution spaces and escape local optima. For the same reason, analyzing and comparing stochastic algorithms is challenging: a single run does not give a complete picture of an algorithm's performance, so multiple independent runs are needed to capture the distribution of possible outcomes. This variability calls for a statistically grounded methodology that combines descriptive statistics (mean, median, standard deviation, ...), inferential statistics (hypothesis testing), and visualization.
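To make this concrete, here is a minimal sketch (not part of SAES) that runs a toy stochastic algorithm, pure random search on the sphere function, 30 times with different seeds and summarizes the outcomes with descriptive statistics. The names `random_search` and `summarize`, the search bounds, and the number of runs are illustrative choices.

```python
import random
import statistics


def random_search(seed, evaluations=100, dim=5):
    """Best sphere-function value found by pure random search (a toy stochastic algorithm)."""
    rng = random.Random(seed)  # each run gets its own seeded generator
    best = float("inf")
    for _ in range(evaluations):
        x = [rng.uniform(-5.0, 5.0) for _ in range(dim)]
        best = min(best, sum(v * v for v in x))  # sphere function: sum of squares
    return best


def summarize(values):
    """Descriptive statistics over the results of several independent runs."""
    return {
        "mean": statistics.mean(values),
        "median": statistics.median(values),
        "stdev": statistics.stdev(values),
    }


runs = [random_search(seed) for seed in range(30)]
print(summarize(runs))
```

Each seed yields a different best value; this run-to-run spread is exactly what SAES is designed to analyse.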

SAES assumes that the results of a comparative study between several algorithms are provided in a CSV file with the following schema:

- Algorithm (string): Algorithm name.

- Instance (string): Instance name.

- MetricName (string): Name of the quality metric used to evaluate the algorithm's performance on the instance.

- ExecutionId (integer): Unique identifier for each algorithm run.

- MetricValue (double): Value of the metric corresponding to the run.

| Algorithm | Instance | MetricName | ExecutionId | MetricValue |
|---|---|---|---|---|
| SVM | Iris | Accuracy | 0 | 0.985 |
| SVM | Iris | Accuracy | 1 | 0.973 |
| ... | ... | ... | ... | ... |
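A results file in this schema can be produced with nothing more than Python's standard `csv` module. The sketch below is one possible way to do it; `write_results` and `FIELDS` are hypothetical helpers, not part of the SAES API.

```python
import csv
import io

# Column order of the results schema described above
FIELDS = ["Algorithm", "Instance", "MetricName", "ExecutionId", "MetricValue"]


def write_results(stream, records):
    """Write (algorithm, instance, metric, run_id, value) tuples as a results CSV."""
    writer = csv.DictWriter(stream, fieldnames=FIELDS)
    writer.writeheader()
    for algorithm, instance, metric, run_id, value in records:
        writer.writerow({
            "Algorithm": algorithm,
            "Instance": instance,
            "MetricName": metric,
            "ExecutionId": int(run_id),   # integer run identifier
            "MetricValue": float(value),  # metric value as a float
        })


buffer = io.StringIO()
write_results(buffer, [
    ("SVM", "Iris", "Accuracy", 0, 0.985),
    ("SVM", "Iris", "Accuracy", 1, 0.973),
])
print(buffer.getvalue())
```

To write to disk instead, pass a file opened with `open("results.csv", "w", newline="")`; the file name is only an example.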

You will also need a second CSV file describing the metrics used in the study, with the following schema:

- MetricName (string): Name of the quality metric used to evaluate the algorithm's performance on the instance.

- Maximize (boolean): Whether the metric should be maximized (`True`) or minimized (`False`).

| MetricName | Maximize |
|---|---|
| Accuracy | True |
| Loss | False |
| ... | ... |
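As an illustration of how the two files fit together, the following sketch reads the `Maximize` flag, computes the median of each algorithm on each instance, and picks the best algorithm per instance, which is the kind of information a median table summarizes. It uses only the standard library and is not SAES's implementation; the helper names, algorithm names (`AlgA`, `AlgB`), and values are made up for the example.

```python
import csv
import io
import statistics
from collections import defaultdict


def load_maximize(stream):
    """Map MetricName -> True if the metric is maximized, False if minimized."""
    return {row["MetricName"]: row["Maximize"].strip().lower() == "true"
            for row in csv.DictReader(stream)}


def median_table(stream, metric):
    """Median MetricValue per (Instance, Algorithm) for a single metric."""
    values = defaultdict(list)
    for row in csv.DictReader(stream):
        if row["MetricName"] == metric:
            values[(row["Instance"], row["Algorithm"])].append(float(row["MetricValue"]))
    return {key: statistics.median(v) for key, v in values.items()}


def best_per_instance(medians, maximize):
    """Best (algorithm, median) on each instance, honouring the optimization direction."""
    best = {}
    for (instance, algorithm), value in medians.items():
        current = best.get(instance)
        if current is None or (value > current[1] if maximize else value < current[1]):
            best[instance] = (algorithm, value)
    return best


# Made-up example files in the two schemas above
RESULTS = """Algorithm,Instance,MetricName,ExecutionId,MetricValue
AlgA,Problem1,Accuracy,0,0.90
AlgA,Problem1,Accuracy,1,0.80
AlgA,Problem1,Accuracy,2,0.95
AlgB,Problem1,Accuracy,0,0.85
AlgB,Problem1,Accuracy,1,0.70
AlgB,Problem1,Accuracy,2,0.99
"""
METRICS = """MetricName,Maximize
Accuracy,True
Loss,False
"""

maximize = load_maximize(io.StringIO(METRICS))
medians = median_table(io.StringIO(RESULTS), "Accuracy")
print(best_per_instance(medians, maximize["Accuracy"]))  # → {'Problem1': ('AlgA', 0.9)}
```

The same medians would rank differently if the metric were minimized, which is why the metrics file is required.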

The SAES library offers a range of functions categorized into three groups, corresponding to its three main features. The SAES Tutorial and the SAES API documentation, which includes a detailed list of features, describe them in depth.

- Python: >= 3.10

```bash
pip install SAES
```

We recommend using uv for fast dependency management:

```bash
# Install uv
curl -LsSf https://astral.sh/uv/install.sh | sh

# Clone and setup
git clone https://github.com/jMetal/SAES.git
cd SAES
uv venv
source .venv/bin/activate  # On Windows: .venv\Scripts\activate
uv pip install -e ".[dev]"
```

Alternatively, with Python's built-in `venv` module:

```bash
python3 -m venv venv
source venv/bin/activate  # On Windows: venv\Scripts\activate
pip install -e ".[dev]"
```

For broader compatibility, environment files are provided:

```bash
# Using pip with requirements.txt
pip install -r requirements.txt

# Using conda with environment.yml
conda env create -f environment.yml
conda activate saes
```

SAES supports deterministic seeds for reproducible research:

```python
from SAES.statistical_tests.bayesian import bayesian_sign_test
from SAES.plots.histoplot import HistoPlot

# `data` and `metrics` must already be defined (see the SAES API documentation for the expected formats)

# Bayesian tests with seed for reproducibility
result, _ = bayesian_sign_test(data, sample_size=5000, seed=42)

# Histogram plots with consistent jitter
histoplot = HistoPlot(data, metrics, "Accuracy", seed=42)
```

See the reproducibility documentation for details.
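The role of the `seed` argument can be illustrated with a simplified, standalone version of the Bayesian sign test; it is not SAES's implementation, and the name `bayesian_sign_test_sketch` and its parameters are hypothetical. Paired differences are counted as falling below, inside, or above a region of practical equivalence (ROPE), a Dirichlet posterior over those three outcomes is sampled by Monte Carlo, and a private `random.Random(seed)` generator makes the estimated probabilities identical across runs with the same seed.

```python
import random


def bayesian_sign_test_sketch(diffs, rope=0.01, prior_strength=1.0, sample_size=5000, seed=None):
    """Simplified Bayesian sign test on paired differences d = a - b,
    assuming larger metric values are better.

    Returns Monte Carlo estimates of [P(b better), P(equivalent), P(a better)].
    """
    rng = random.Random(seed)  # private generator: same seed -> same samples
    left = sum(1 for d in diffs if d < -rope)
    inside = sum(1 for d in diffs if -rope <= d <= rope)
    right = sum(1 for d in diffs if d > rope)
    # Dirichlet parameters: observed counts, a tiny epsilon so every parameter is > 0,
    # and the prior pseudo-observation placed on the ROPE
    alpha = [left + 1e-4, inside + 1e-4 + prior_strength, right + 1e-4]
    wins = [0, 0, 0]
    for _ in range(sample_size):
        # A Dirichlet draw is a vector of Gamma(alpha_i, 1) draws divided by their sum;
        # dividing does not change which component is largest, so it is skipped here
        g = [rng.gammavariate(a, 1.0) for a in alpha]
        wins[g.index(max(g))] += 1
    return [w / sample_size for w in wins]


# Illustrative paired differences between two algorithms on 8 instances
diffs = [0.03, 0.05, -0.002, 0.04, 0.02, 0.06, 0.01, 0.07]
print(bayesian_sign_test_sketch(diffs, seed=42))
```

Calling it twice with `seed=42` returns the same three probabilities; with `seed=None` the estimates fluctuate slightly from call to call.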

SAES can run in headless mode (without display) for automated workflows, CI/CD pipelines, and server environments:

```bash
# Set matplotlib backend
export MPLBACKEND=Agg

# Run SAES commands
python -m SAES -ls -ds data.csv -ms metrics.csv -m HV -s friedman -op results.tex
python -m SAES -bp -ds data.csv -ms metrics.csv -m HV -i Problem1 -op boxplot.png
```

See `examples/headless_mode_example.py` for a complete Python example or `examples/headless_cli_example.sh` for CLI usage.
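In a CI job it can be convenient to drive the same two commands from a Python script. The sketch below uses hypothetical helper names and reuses only the flags shown above: it builds the argument lists and runs them with `MPLBACKEND=Agg` set in the child process environment. `sys.executable` is used so the commands run with the same interpreter as the script.

```python
import os
import subprocess
import sys


def headless_env():
    """Copy of the current environment with matplotlib's non-interactive Agg backend."""
    env = dict(os.environ)
    env["MPLBACKEND"] = "Agg"
    return env


def saes_commands(data_csv, metrics_csv, metric, instance,
                  table_out="results.tex", plot_out="boxplot.png"):
    """The two CLI invocations shown above, as argument lists."""
    base = [sys.executable, "-m", "SAES"]
    return [
        base + ["-ls", "-ds", data_csv, "-ms", metrics_csv, "-m", metric,
                "-s", "friedman", "-op", table_out],
        base + ["-bp", "-ds", data_csv, "-ms", metrics_csv, "-m", metric,
                "-i", instance, "-op", plot_out],
    ]


def run_headless(commands):
    """Run each command headlessly; check=True raises CalledProcessError on failure."""
    env = headless_env()
    for cmd in commands:
        subprocess.run(cmd, env=env, check=True)
```

For example, `run_headless(saes_commands("data.csv", "metrics.csv", "HV", "Problem1"))` reproduces the shell commands above, and `check=True` makes the pipeline fail fast if either command exits with a non-zero status.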