SFU MSE capstone project Jan 2021-Aug 2021

Supervisor: Dr. Rad

Abdullah Tariq

Afnan Hassan

Alzaib Karovalia

Mohammad Uzair

Syed Imad Rizvi

https://www.youtube.com/watch?v=eHhP7St_r0k

The goal of this project is to build a prototype self-driving vehicle that uses computer vision and deep neural networks to navigate various environments. To achieve this goal, the project is divided into several milestones.

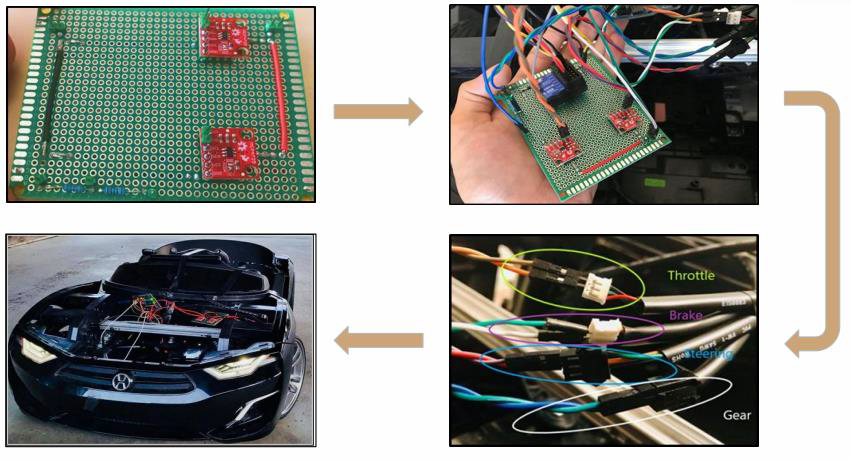

The prototype autonomous driving platform (ADP) is installed in a Henes Broon F8.

To take control of the car, the potentiometers on the steering, throttle, and brake are replaced with MCP4725 DACs, which are controlled by an Arduino Uno. For wireless control, the Arduino is connected to a radio receiver that receives input signals from the transmitter operated by the driver. The Arduino maps these signals to a voltage between 1.1 V and 2.2 V (i.e., from full left to full right on the steering) and outputs it through the DACs.
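
The signal chain above (receiver pulse, linear mapping, DAC voltage) can be sketched in a few lines. This is a minimal Python illustration of the arithmetic, not the project's Arduino code. It assumes standard RC pulse widths of 1000 to 2000 µs and an MCP4725 powered from the Uno's 5 V rail, so that the DAC output follows Vout = Vref × code / 4096; both assumptions are not from the original write-up, and only the 1.1 V to 2.2 V steering range comes from the text.

```python
# Hypothetical sketch of the Arduino-side mapping, written in Python for illustration.
# Assumptions (not from the project): RC pulse widths of 1000-2000 us and an
# MCP4725 powered from the Uno's 5 V rail, so its reference voltage is 5 V.


def map_range(x, in_lo, in_hi, out_lo, out_hi):
    """Linearly rescale x from [in_lo, in_hi] to [out_lo, out_hi] (float version of Arduino map())."""
    return out_lo + (x - in_lo) * (out_hi - out_lo) / (in_hi - in_lo)


def pwm_to_steering_voltage(pulse_us, pwm_min=1000, pwm_max=2000, v_left=1.1, v_right=2.2):
    """Convert a receiver pulse width to a steering voltage (1.1 V = full left, 2.2 V = full right)."""
    pulse_us = min(max(pulse_us, pwm_min), pwm_max)  # clamp glitches outside the expected range
    return map_range(pulse_us, pwm_min, pwm_max, v_left, v_right)


def voltage_to_dac_code(volts, vref=5.0, bits=12):
    """Convert a target voltage to an MCP4725 input code using Vout = Vref * code / 2**bits."""
    full_scale = 2 ** bits
    code = round(volts * full_scale / vref)
    return max(0, min(code, full_scale - 1))  # keep the code inside the 12-bit range


for pulse in (1000, 1500, 2000):
    v = pwm_to_steering_voltage(pulse)
    print(pulse, round(v, 3), voltage_to_dac_code(v))
```

Clamping the pulse width first means a noisy or out-of-range receiver reading can never push the steering voltage outside the 1.1 V to 2.2 V window.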

Two separate datasets were collected or downloaded: one to train PilotNet (steering prediction) and one to train YOLOv4-Tiny (custom object detection).

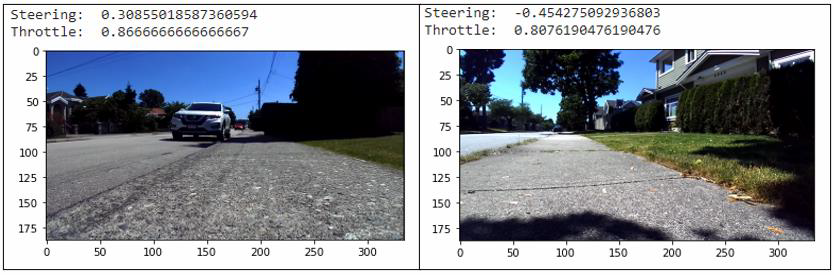

To train PilotNet and test our ADP system on the sidewalk, over 70,000 images were collected by driving the car around the block over a few weeks. Different routes were chosen so that the model would be trained on data from a variety of operating conditions. During data collection, the Arduino reads the PWM values from the receiver and maps them to the DACs' input range. These mapped values are sent to the on-board computer (OBC), a laptop, over serial communication. A Python script on the OBC records each camera frame and associates it with the steering and throttle values read from the serial port.
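
The frame-labelling step could look roughly like the sketch below. It is hypothetical: the `steering,throttle` line format, the CSV output, and the one-line-per-frame pairing are assumptions made for illustration. In a live setup the raw lines could come from pySerial's `serial.Serial(...).readline()` and the frames from OpenCV's `cv2.VideoCapture(...).read()`; here both are passed in as plain lists so the logic can run on its own.

```python
import csv
import io


def parse_serial_line(line):
    """Parse a line such as b"1352,2048" into (steering, throttle) integers.

    The "steering,throttle" format is a hypothetical example, not the project's protocol.
    Returns None for malformed or partial lines, which are common on a serial link.
    """
    text = line.decode("ascii", errors="ignore").strip()
    parts = text.split(",")
    if len(parts) != 2:
        return None
    try:
        return int(parts[0]), int(parts[1])
    except ValueError:
        return None


def log_samples(frames, serial_lines, out):
    """Write one CSV row per frame, labelled with the most recent valid control values.

    frames: iterable of frame identifiers (e.g. saved image file names).
    serial_lines: iterable of raw lines, one read alongside each frame.
    """
    writer = csv.writer(out)
    writer.writerow(["frame", "steering", "throttle"])
    latest = None
    written = 0
    for frame_name, line in zip(frames, serial_lines):
        parsed = parse_serial_line(line)
        if parsed is not None:
            latest = parsed
        if latest is None:
            continue  # no valid control reading yet, so this frame cannot be labelled
        writer.writerow([frame_name, *latest])
        written += 1
    return written


buffer = io.StringIO()
count = log_samples(
    ["f0.jpg", "f1.jpg", "f2.jpg"],
    [b"noise\n", b"1352,2048\r\n", b"13"],  # garbage, a valid reading, a truncated line
    buffer,
)
print(count)
print(buffer.getvalue())
```

Reusing the most recent valid reading keeps a frame usable when a single serial line arrives garbled, at the cost of a small label lag.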

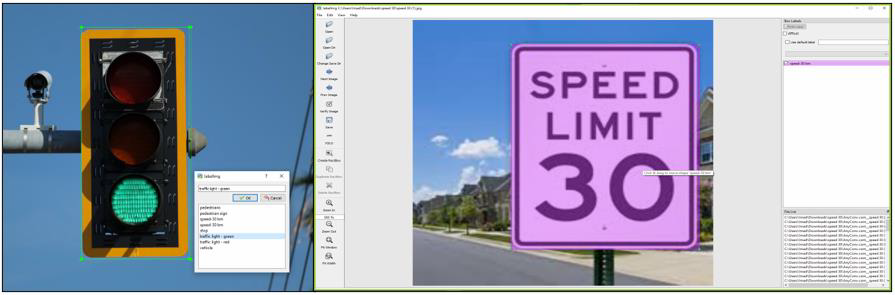

To train the YOLO object detection model, existing datasets from Kaggle and Google were downloaded. Based on the system requirements, custom classes were defined for the objects to be detected, such as traffic signs, stop signs, and pedestrians. The downloaded images were then hand-labeled according to these classes. In total, over 1,100 images of pedestrians, vehicles, stop signs, and 30 km/h speed signs were labeled in YOLO format.
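
For reference, a YOLO (Darknet) label file has one line per object: the class index followed by the box centre, width, and height, each normalized by the image size to the range 0 to 1. The sketch below converts a pixel-space box into such a line. The class order in `CLASSES` is hypothetical; the project's actual class IDs are not given.

```python
# Hypothetical class order; the project's actual class IDs are not given.
CLASSES = ["pedestrian", "vehicle", "stop_sign", "speed_limit_30"]


def to_yolo_line(class_name, x_min, y_min, x_max, y_max, img_w, img_h):
    """Convert a pixel-space box to one YOLO label line.

    YOLO (Darknet) labels store: class_id x_center y_center width height,
    with the four box values normalized by the image width/height to [0, 1].
    """
    if not (0 <= x_min < x_max <= img_w and 0 <= y_min < y_max <= img_h):
        raise ValueError("box must lie inside the image and have positive size")
    class_id = CLASSES.index(class_name)
    x_c = (x_min + x_max) / 2 / img_w
    y_c = (y_min + y_max) / 2 / img_h
    w = (x_max - x_min) / img_w
    h = (y_max - y_min) / img_h
    return f"{class_id} {x_c:.6f} {y_c:.6f} {w:.6f} {h:.6f}"


print(to_yolo_line("stop_sign", 100, 50, 200, 150, img_w=400, img_h=200))
```

Because the values are normalized, the same label stays valid if the image is later resized to the network's input resolution.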

PilotNet, originally proposed by NVIDIA, consists of 9 layers: one normalization layer, 5 convolutional layers, and 3 fully connected layers. We modified the architecture to use 5 convolutional layers and 4 fully connected layers.
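
The effect of the convolutional stack can be made concrete with a shape calculation. The sketch below assumes the layer settings of NVIDIA's original PilotNet (a 66x200x3 input; 24, 36, and 48 filters of 5x5 with stride 2; then two layers of 64 filters of 3x3 with stride 1; no padding). The project's modified model may use different sizes, and the widths of its 4 fully connected layers are not stated here, so only the convolutional part is computed.

```python
# Original NVIDIA PilotNet convolutional stack (assumed to carry over to the modified model).
CONV_LAYERS = [
    # (filters, kernel, stride)
    (24, 5, 2),
    (36, 5, 2),
    (48, 5, 2),
    (64, 3, 1),
    (64, 3, 1),
]


def conv_output_size(size, kernel, stride):
    """Output length of a 'valid' (unpadded) convolution along one axis."""
    return (size - kernel) // stride + 1


def conv_stack_shapes(height=66, width=200, channels=3):
    """Return the (height, width, channels) shape after the input and after each conv layer."""
    shapes = [(height, width, channels)]
    for filters, kernel, stride in CONV_LAYERS:
        height = conv_output_size(height, kernel, stride)
        width = conv_output_size(width, kernel, stride)
        shapes.append((height, width, filters))
    return shapes


shapes = conv_stack_shapes()
h, w, c = shapes[-1]
flat = h * w * c  # number of features fed into the first fully connected layer
for shape in shapes:
    print(shape)
print("flattened features:", flat)
```

The flattened feature count is what the first fully connected layer receives, so adding a fourth fully connected layer changes only the head of the network, not these shapes.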

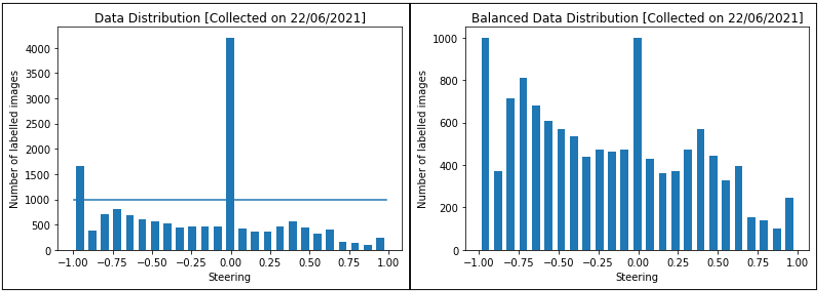

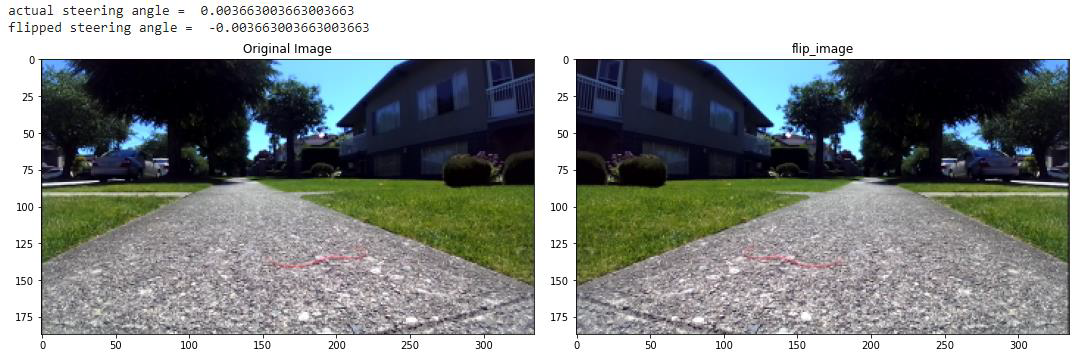

Because the car is driven straight most of the time, most of the collected data lie around a steering angle of 0. Training a neural network on such a dataset would bias its predictions toward driving straight all the time, resulting in poor performance. To counter this, the steering values are grouped into bins, and the excess data above a threshold in each bin is randomly deleted. In addition, to further balance the overall dataset, each image is flipped horizontally with its steering value mirrored, and the result is added to the dataset.
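
The bin-and-cap balancing and flip augmentation described above can be sketched as follows. This is a minimal illustration, not the project's script: it assumes steering values normalized to [-1, 1] (so mirroring is a sign flip), and the bin count of 25 and cap of 400 samples per bin are hypothetical parameters. Images are plain lists of pixel rows so the example runs without extra libraries.

```python
import random
from collections import defaultdict


def steering_bin(steering, num_bins):
    """Map a steering value in [-1, 1] (assumed normalization) to a bin index."""
    idx = int((steering + 1) / 2 * num_bins)
    return min(max(idx, 0), num_bins - 1)


def balance_by_bins(samples, num_bins=25, max_per_bin=400, seed=0):
    """Randomly drop samples from any steering bin that holds more than max_per_bin.

    samples: list of (image, steering) pairs. num_bins and max_per_bin are hypothetical.
    """
    rng = random.Random(seed)
    bins = defaultdict(list)
    for sample in samples:
        bins[steering_bin(sample[1], num_bins)].append(sample)
    kept = []
    for idx in sorted(bins):
        group = bins[idx]
        if len(group) > max_per_bin:
            group = rng.sample(group, max_per_bin)  # random subset without replacement
        kept.extend(group)
    return kept


def add_flipped(samples):
    """Append a left-right mirrored copy of every sample with its steering negated.

    Images are lists of pixel rows here; with a NumPy array this would be image[:, ::-1].
    """
    flipped = [([row[::-1] for row in image], -steering) for image, steering in samples]
    return samples + flipped


straight = [([[1, 2, 3]], 0.0)] * 1000  # heavily over-represented "drive straight" data
turning = [([[4, 5, 6]], 0.5)] * 10
balanced = balance_by_bins(straight + turning)
augmented = add_flipped(balanced)
print(len(balanced), len(augmented))
```

Capping first and flipping second means every remaining turn gets a mirrored counterpart, so left and right turns end up equally represented.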

Before testing, we had to ensure that the remote could override the AI mode at any time, so that the user can take control whenever needed. Testing was then carried out on the sidewalk, with the system detecting objects such as vehicles, pedestrians, stop signs, and speed signs.
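
One way to express the remote-override requirement is a small arbitration function that decides which command reaches the DACs. The sketch below is hypothetical: the dedicated override switch, the normalized command ranges, and the 0.05 deadband on the manual sticks are assumptions, not details from the project. The rule it encodes is that the manual command wins whenever the override is on, the driver moves a stick, or no AI command is available.

```python
from dataclasses import dataclass


@dataclass
class Command:
    steering: float  # normalized to [-1, 1] (assumed)
    throttle: float  # normalized to [0, 1] (assumed)
    source: str


def select_command(ai_cmd, manual_cmd, override_switch_on, manual_deadband=0.05):
    """Return the command to send to the DACs; the remote always takes priority when active."""
    manual_active = (
        override_switch_on
        or abs(manual_cmd.steering) > manual_deadband
        or manual_cmd.throttle > manual_deadband
    )
    if manual_active or ai_cmd is None:
        return manual_cmd  # fail safe: fall back to the driver
    return ai_cmd


ai = Command(steering=0.3, throttle=0.4, source="ai")
neutral = Command(steering=0.0, throttle=0.0, source="manual")
print(select_command(ai, neutral, override_switch_on=False).source)
print(select_command(ai, neutral, override_switch_on=True).source)
```

Treating any stick movement beyond the deadband as an override lets the driver take control instantly without first reaching for a mode switch.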

1- Add cameras around the car so the model has complete 360-degree awareness of its surroundings. Our team plans to add at least 3 more cameras to cover each side of the vehicle.

2- Train on more data, since the performance of our ADP system improves with the amount of training data.

3- Broaden the object detection dataset to include more traffic signs, such as school zone, bicycle, one-way, and merge signs.

4- Combine an LSTM (memory) model with the CNN to enable time-dependent predictions for our ADP system.

"Broon F810 The Instructions Manual", Manuals Library. [Online]. Available: https://www.manualslib.com/manual/1259961/Broon-F810.html#product-F830.

J. Redmon, "YOLO: Real-Time Object Detection", Pjreddie.com, 2021. [Online]. Available: https://pjreddie.com/darknet/yolo/.

M. Bojarski, L. Jackel, B. Firner and U. Muller, "Explaining How End-to-End Deep Learning Steers a Self-Driving Car | NVIDIA Developer Blog", NVIDIA Developer Blog, 2021. [Online]. Available: https://developer.nvidia.com/blog/explaining-deep-learning-self-driving-car/.