Understanding and Evaluating Commonsense Reasoning in Transformer-based Architectures

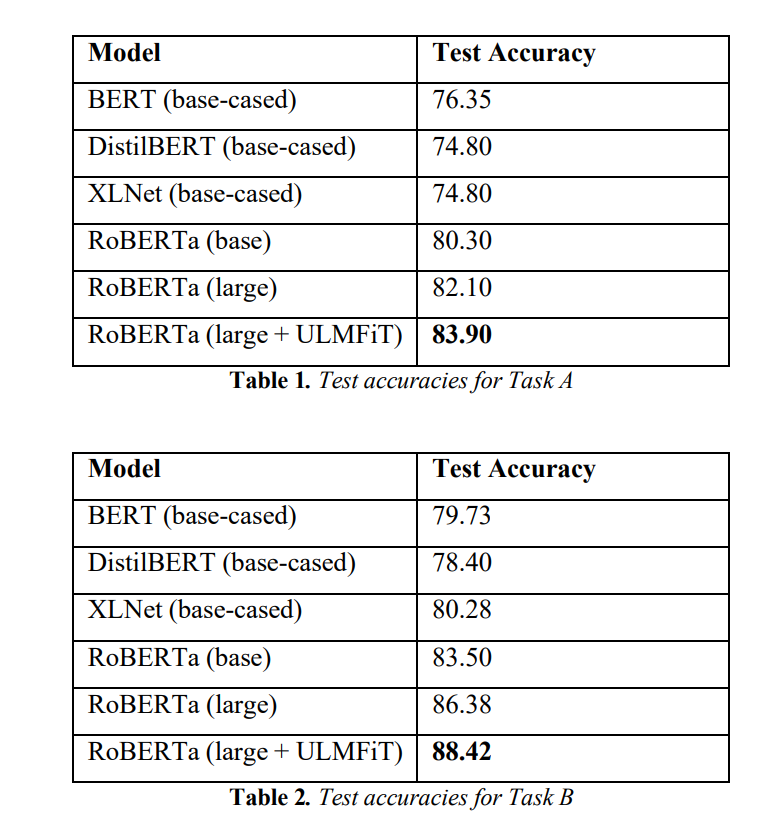

Humans are naturally adept at distinguishing sensical statements from nonsensical ones and at explaining why a statement makes no sense. NLP methods, however, may struggle with such tasks. This paper evaluates various transformer-based architectures on the commonsense validation and explanation tasks. It also investigates methods to improve their performance, and it interprets how fine-tuned language models perform these tasks by analysing the attention distribution over sentences at inference time. The tasks entail identifying the nonsensical statement in a given pair of similar statements (validation) and then selecting the correct reason for its nonsensical nature (explanation). Accuracies of 83.90% and 88.42% are achieved on the respective tasks, using the RoBERTa (large) language model fine-tuned on the datasets with the ULMFiT training approach.
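The abstract names the ULMFiT training approach but does not detail it. As a hedged illustration, the Python sketch below implements two ingredients of the ULMFiT recipe: the slanted triangular learning-rate schedule and discriminative (per-layer) learning rates. The function names are hypothetical. The defaults (`lr_max = 0.01`, `cut_frac = 0.1`, `ratio = 32`, per-layer decay factor 2.6) are the values originally proposed for ULMFiT, not settings reported for this paper. Gradual unfreezing, the third ULMFiT ingredient, is omitted.

```python
import math
from typing import List


def slanted_triangular_lr(t: int, total_steps: int, lr_max: float = 0.01,
                          cut_frac: float = 0.1, ratio: float = 32.0) -> float:
    """Learning rate at step t under ULMFiT's slanted triangular schedule.

    The rate rises linearly for the first cut_frac of training, then decays
    linearly; `ratio` is how much smaller the lowest rate is than lr_max.
    Defaults follow the original ULMFiT proposal (assumed, not this paper's).
    """
    cut = math.floor(total_steps * cut_frac)
    if cut < 1:
        raise ValueError("total_steps * cut_frac must be at least 1")
    if t < cut:
        p = t / cut  # short warm-up: fraction of the increase completed
    else:
        p = 1 - (t - cut) / (cut * (1 / cut_frac - 1))  # long linear decay
    return lr_max * (1 + p * (ratio - 1)) / ratio


def discriminative_lrs(top_lr: float, num_layers: int,
                       decay: float = 2.6) -> List[float]:
    """Per-layer learning rates, lowest layer first.

    Each layer gets the rate of the layer above it divided by `decay`
    (2.6 is the ULMFiT default; an assumption here).
    """
    return [top_lr / decay ** (num_layers - 1 - i) for i in range(num_layers)]


if __name__ == "__main__":
    total = 1000
    for step in (0, 50, 100, 550, 1000):
        print(step, round(slanted_triangular_lr(step, total), 6))
    # Hypothetical example: one group per layer of a 24-layer encoder
    # (RoBERTa-large has 24 transformer layers).
    lrs = discriminative_lrs(1e-5, 24)
    print(f"bottom layer: {lrs[0]:.2e}, top layer: {lrs[-1]:.2e}")
```

Applying the 2.6 factor layer by layer across 24 layers shrinks the bottom layer's rate by more than nine orders of magnitude, effectively freezing it. The factor was proposed for a much shallower LSTM model, so an adaptation to a deep transformer would plausibly use a smaller factor or apply it per group of layers. The abstract does not state which choice this paper made.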