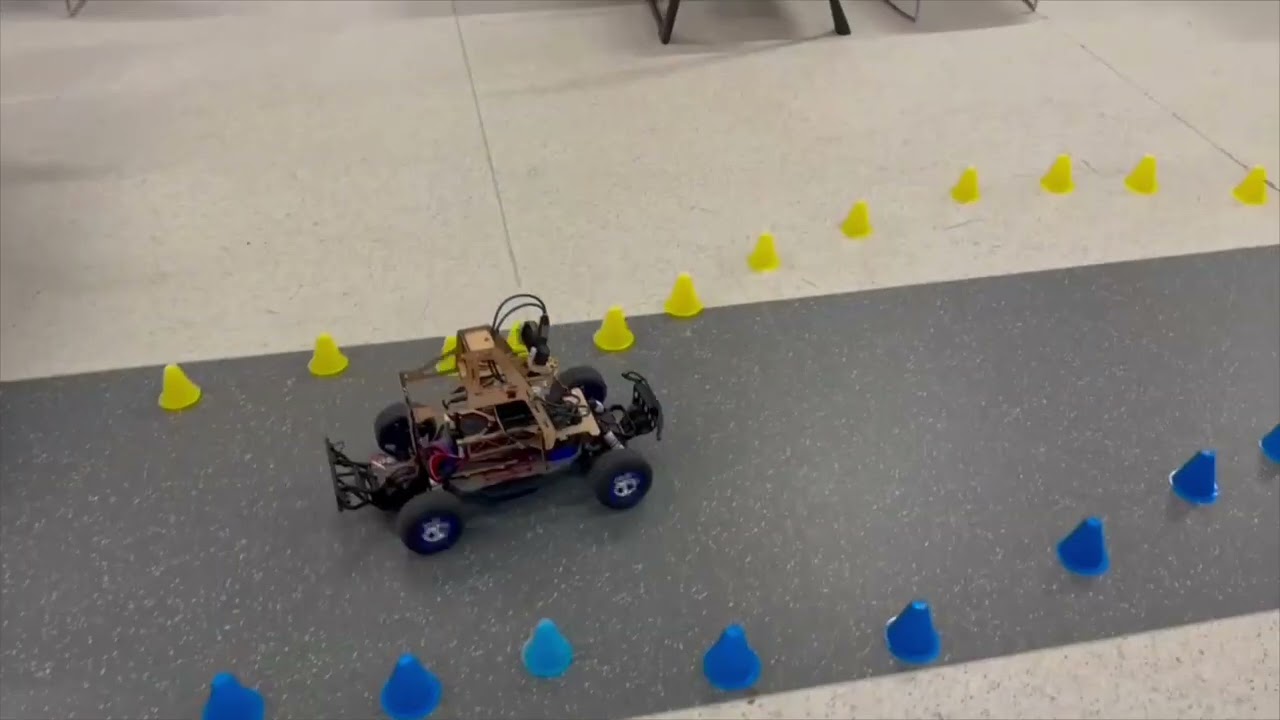

A complete autonomous driving stack for RC-scale vehicles featuring real-time cone-based lane keeping, ToF-based obstacle avoidance, and a comprehensive software-in-the-loop simulation environment.

Click the image above to watch the RC car navigating different scenarios

- Overview

- Key Features

- System Architecture

- Hardware Platform

- Sensor Suite

- Control Policies

- Simulation Framework

- Getting Started

- Usage

- Project Structure

- Contributing

- License

- Acknowledgments

This project implements a complete autonomous driving system for a Traxxas RC car platform, developed as part of the AI for Robotics (ELEN90095) course. The system combines:

- Real-time perception using YOLOv8-based cone detection and VL53L5CX Time-of-Flight sensors

- Intelligent control policies for lane-keeping and obstacle avoidance with PD controllers

- Software-in-the-loop simulation that mirrors real hardware for rapid policy development

- Production-ready deployment with Docker containerization and web-based monitoring

The vehicle successfully navigates cone-defined tracks while dynamically avoiding obstacles, demonstrating the integration of computer vision, sensor fusion, and real-time control.

| Feature | Description |

|---|---|

| Cone-Based Lane Keeping | Linear regression on detected yellow/blue cones with proportional steering control |

| Obstacle Avoidance | Multi-zone ToF sensing with velocity-aware PD controller and emergency braking |

| Gap Navigation | Intelligent maneuvering around obstacles when sufficient clearance exists |

| Sensor Fusion | Integration of camera, ToF, IMU, LiDAR, and wheel encoder data |

| Simulation Environment | Complete SIL testing with realistic sensor noise models |

| Batch Testing | Automated regression testing across multiple track scenarios |

| Web Interface | Real-time monitoring and control via browser-based dashboard |

| Docker Deployment | Containerized environment for reproducible builds |

┌─────────────────────────────────────────────────────────────────────────┐

│ PERCEPTION LAYER │

├─────────────────┬─────────────────┬─────────────────┬───────────────────┤

│ OAK-D Camera │ VL53L5CX ToF │ BNO08x IMU │ RPLiDAR A1 │

│ (YOLOv8) │ (4x4 Array) │ (9-DOF) │ (360°) │

│ 30 Hz │ 20 Hz │ 400 Hz │ 10 Hz │

└────────┬────────┴────────┬────────┴────────┬────────┴─────────┬─────────┘

│ │ │ │

▼ ▼ ▼ ▼

┌─────────────────────────────────────────────────────────────────────────┐

│ ROS2 MESSAGE LAYER │

│ /cone_points /tof_sensor /imu_sensor /scan /wheel_speed │

└─────────────────────────────────┬───────────────────────────────────────┘

│

▼

┌─────────────────────────────────────────────────────────────────────────┐

│ POLICY NODE │

│ ┌─────────────────┐ ┌─────────────────┐ ┌─────────────────┐ │

│ │ Lane Keeping │ │ Obstacle │ │ Gap Navigation │ │

│ │ (Cone Fitting) │ │ Avoidance │ │ (Maneuvering) │ │

│ │ │ │ (PD Control) │ │ │ │

│ └────────┬────────┘ └────────┬────────┘ └────────┬────────┘ │

│ └────────────────────┼────────────────────┘ │

│ ▼ │

│ FSM State Machine │

│ (IDLE → ZERO → POLICY_ACTIVE) │

└─────────────────────────────────┬───────────────────────────────────────┘

│

▼

┌─────────────────────────────────────────────────────────────────────────┐

│ ACTUATION LAYER │

│ /esc_and_steering_set_point_percent_action │

├─────────────────────────────────┬───────────────────────────────────────┤

│ ESC Motor │ Steering Servo │

│ [-100%, +100%] │ [-100%, +100%] │

└─────────────────────────────────┴───────────────────────────────────────┘

- Platform: Traxxas RC Car (1:10 scale)

- Wheelbase: 287mm

- Track Width: 235mm

- Max Speed: ~3.0 m/s

- Steering Angle: ±30°

| Component | Role |

|---|---|

| Raspberry Pi 4 | Main compute, ROS2 nodes |

| RP2040 Pico #1 | IMU interface (BNO08x) |

| RP2040 Pico #2 | Encoder & RC switch monitoring |

- AI4R-PIHAT: Raspberry Pi HAT for sensor/actuator routing

- AI4R-SENSORPCB: Centralized sensor breakout board

| Sensor | Model | Purpose | Key Specs |

|---|---|---|---|

| Camera | OAK-D | Cone detection (YOLOv8) | 120° FOV, 30 Hz, 0.5-10m range |

| ToF Array | VL53L5CX | Obstacle detection | 4×4 zones, 45° FOV, 20 Hz, ±5mm accuracy |

| IMU | BNO08x | Orientation & acceleration | 9-DOF, 400 Hz, auto-calibration |

| LiDAR | RPLiDAR A1 | Environment mapping | 360°, 10 Hz, 0.15-12m range |

| Encoder | Hall Effect | Speed estimation | 16.32 ticks/rev, period-based |

The lane-keeping system uses computer vision to detect yellow (left) and blue (right) cones, fitting linear trajectories to determine the road centerline.

# Simplified lane-keeping logic

if yellow_cones and blue_cones:

centerline = (yellow_fit + blue_fit) / 2

elif yellow_cones:

centerline = yellow_fit - ROAD_WIDTH/2

elif blue_cones:

centerline = blue_fit + ROAD_WIDTH/2

lateral_error = evaluate_centerline(look_ahead_distance)

steering = -Kp * lateral_errorKey Parameters:

ROAD_WIDTH: 1000mmLOOK_AHEAD_DISTANCE: 1000mmKp_STEER: 80.0

A velocity-aware PD controller monitors the ToF sensor array for obstacles, implementing graduated braking and emergency stop capabilities.

┌─────────────────────────────────────────────────────────┐

│ ToF 4×4 Grid │

│ ┌───┬───┬───┬───┐ │

│ │ 0 │ 1 │ 2 │ 3 │ ← Zones 4-11 used for detection │

│ ├───┼───┼───┼───┤ │

│ │ 4 │ 5 │ 6 │ 7 │ ← Center zones for forward sensing │

│ ├───┼───┼───┼───┤ │

│ │ 8 │ 9 │10 │11 │ ← Center zones for forward sensing │

│ ├───┼───┼───┼───┤ │

│ │12 │13 │14 │15 │ │

│ └───┴───┴───┴───┘ │

└─────────────────────────────────────────────────────────┘

Control Strategy:

- P (Proportional): Responds to distance error from target

- D (Derivative): Responds to rate of approach

- Emergency Override: Hard brake if distance < 0.8m

Key Parameters:

BASE_TARGET_DISTANCE: 1.2mEMERGENCY_BRAKE_DISTANCE: 0.8mKp_OBSTACLE: 80.0Kd_OBSTACLE: 40.0

When an obstacle is detected but sufficient clearance exists on one side, the system calculates navigable gaps and steers around the obstacle.

# Gap calculation

gap_on_left = |obstacle_y - yellow_cones_y| - obstacle_width/2

gap_on_right = |obstacle_y - blue_cones_y| - obstacle_width/2

if max(gap_on_left, gap_on_right) >= MIN_GAP_WIDTH:

steer_toward_larger_gap()

else:

apply_pd_braking()Key Parameters:

MIN_GAP_WIDTH: 0.35mMANEUVER_DISTANCE_THRESHOLD: 1.6mSTEERING_BIAS_GAIN: 80.0

The project includes a comprehensive Software-in-the-Loop (SIL) simulation environment that publishes identical ROS2 topics as the real hardware, enabling rapid policy development and testing.

- Identical Interface: Same topics and message types as hardware

- Realistic Sensor Models: Gaussian noise, dropout, detection probability

- Vehicle Dynamics: Bicycle model with tire slip and motor lag

- Performance Metrics: Automatic scoring and analysis

- Batch Testing: Run multiple scenarios automatically

- Video Recording: Auto-generated MP4 visualizations

| Scenario | Description |

|---|---|

simple_straight.yaml |

Baseline straight track |

s_curve.yaml |

Two connected curves (20m) |

s_curve_tight.yaml |

Tighter radius curves |

obstacle_course.yaml |

Multiple obstacles |

obstacle_goldilocks.yaml |

Single obstacle at optimal detection distance |

obstacle_immediate_wall.yaml |

Emergency braking test |

real_track_oct14.yaml |

Real measured track layout |

# Single scenario test

ros2 launch ai4r_pkg quick_test.launch.py \

scenario:=obstacle_goldilocks \

real_time_factor:=5.0

# Batch testing

ros2 run ai4r_pkg batch_tester.py \

test/scenarios/tracks/ \

--filter "obstacle" \

--timeout 120Results are saved to sim_results/ including JSON logs, performance plots, and MP4 recordings.

- Ubuntu 22.04 LTS

- ROS2 Humble

- Python 3.10+

- Docker (optional, for containerized deployment)

git clone https://github.com/yourusername/Autonomous-RC-Car-AI-Project.git

cd Autonomous-RC-Car-AI-ProjectFollow the official ROS2 installation guide.

cd ros2_ws

# Source ROS2

source /opt/ros/humble/setup.bash

# Install dependencies

sudo rosdep init # Only needed once

rosdep update

rosdep install -i --from-path src --rosdistro humble -y

# Build

colcon build

# Source the workspace

source install/setup.bashcd mcu_ws/build

cmake .. && make

# Outputs: ai4r-sensors-rp1.uf2, ai4r-sensors-rp2.uf2cd docker

docker-compose up -d

# Access web interface at http://localhost:8080For the sensor PCB parser node to work, grant read/write access to the serial ports:

echo 'KERNEL=="ttyACM0", MODE="0666"' | sudo tee /etc/udev/rules.d/ttyACM0.rules

echo 'KERNEL=="ttyACM1", MODE="0666"' | sudo tee /etc/udev/rules.d/ttyACM1.rules

sudo udevadm control --reload-rules

sudo udevadm triggerros2 launch ai4r_pkg ai4r_bringup_launch.py# Vehicle control only

ros2 launch ai4r_pkg traxxas_launch.py

# Sensors + cone detection

ros2 launch ai4r_pkg traxxas_imu_tof_cone_detector_launch.py

# Policy node only

ros2 launch ai4r_pkg policy_launch.py

# Camera streaming

ros2 launch ai4r_pkg camera_stream_launch.pyros2 run ai4r_pkg keyboard_teleop.py# List all topics

ros2 topic list

# View cone detections

ros2 topic echo /cone_points

# View ToF data

ros2 topic echo /tof_sensor_vl53l5cx

# Check topic frequency

ros2 topic hz /cone_pointsros2 bag record /cone_points /tof_sensor_vl53l5cx /imu_sensor /wheel_speed_m_per_secAutonomous-RC-Car-AI-Project/

├── ros2_ws/ # Main ROS2 workspace

│ └── src/

│ ├── ai4r_pkg/ # Core package

│ │ ├── scripts/ # Python nodes

│ │ │ ├── policy_node.py # Main control policy

│ │ │ ├── cone_detector_node.py

│ │ │ └── models/ # YOLOv8 models

│ │ ├── src/ # C++ nodes

│ │ ├── launch/ # Launch files

│ │ ├── config/ # Parameter files

│ │ ├── drivers/ # Hardware drivers

│ │ └── test/

│ │ ├── simulation/ # SIL framework

│ │ └── scenarios/ # Test tracks (YAML)

│ └── ai4r_interfaces/ # Custom messages

│

├── mcu_ws/ # RP2040 Pico firmware

│ ├── ai4r-sensors-rp1.cpp # IMU interface

│ ├── ai4r-sensors-rp2.cpp # Encoder/RC switch

│ └── [libraries]/ # BNO08x, VL53L5CX, servo

│

├── docker/ # Docker configuration

├── web_interface/ # Browser-based dashboard

├── pcb_files/ # KiCAD PCB designs

├── sim_results/ # Simulation outputs

├── real_runs/ # Hardware run recordings

├── SIMULATION_ARCHITECTURE.md # Detailed simulation docs

└── README.md # This file

cd /path/to/repository

sudo chmod 777 -R .- Check I2C connection:

i2cdetect -y 1 - Verify power supply (3.3V)

- Check sensor address conflicts

- Verify OAK-D is connected:

lsusb | grep Movidius - Install DepthAI:

pip install depthai - Check permissions:

sudo usermod -aG video $USER

Contributions are welcome! Please follow these steps:

- Fork the repository

- Create a feature branch (

git checkout -b feature/amazing-feature) - Commit your changes (

git commit -m 'Add amazing feature') - Push to the branch (

git push origin feature/amazing-feature) - Open a Pull Request

This project is licensed under the MIT License - see the LICENSE.txt file for details.

- Course: AI for Robotics (ELEN90095)

- Institution: University of Melbourne

- Platform: Traxxas RC Car

- Frameworks: ROS2 Humble, YOLOv8, Pico SDK

- Libraries: BNO08x, VL53L5CX, DepthAI